Most organizations today recognize the potential of AI to improve decision-making. Many have experimented with large language models (LLMs) and chatbot-style interfaces in the hope of unlocking value from their internal knowledge. Yet despite significant investment, the results have often fallen short.

A recent MIT study highlights what many teams are experiencing first-hand: while LLMs are powerful, most enterprise AI initiatives fail to deliver meaningful ROI. The core reason is simple. LLMs are not trained on the knowledge that matters most to your organization—the information locked inside your documents, systems, and experts.

At Geminos, we built KnowledgeWay to solve this problem.

The challenge: making enterprise knowledge usable by AI

LLMs such as the models behind ChatGPT excel at general reasoning and natural language interaction, but they lack access to organization-specific knowledge. They don’t understand your contracts, operating procedures, engineering manuals, or the informal expertise held by subject matter experts.

To address this, most teams have turned to Retrieval Augmented Generation (RAG). RAG systems ingest internal documents, retrieve relevant text in response to a query, and inject that text into an LLM’s context window so it can generate an answer grounded in internal data.

RAG is a step in the right direction—but at enterprise scale, today’s approaches break down.

Why vector RAG falls short

The most common RAG implementations rely on vector databases. Documents are split into chunks, embedded into vectors, and retrieved using similarity search.
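The chunk, embed, and retrieve pipeline described above can be sketched in a few lines of standard-library Python. This is a toy under stated assumptions: `embed` uses plain word counts as a stand-in for a learned embedding model, a Python list stands in for a vector database, and the function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter

def chunk(text: str, size: int = 8) -> list[str]:
    """Split text into fixed-size chunks of `size` words (a deliberately naive strategy)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase term counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Inject the retrieved chunks into the text that will be sent to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

# Hypothetical internal documents.
docs = [
    "Pump P-101 requires inspection every 90 days.",
    "The cafeteria opens at 8 am on weekdays.",
    "Supplier contracts renew annually in March.",
]
index = [c for d in docs for c in chunk(d)]
query = "How often does pump P-101 need inspection?"
top = retrieve(query, index, k=1)
print(top)  # ['Pump P-101 requires inspection every 90 days.']
print(build_prompt(query, top))
```

Each stage here is replaceable: a production system would swap in a real embedding model, an approximate nearest-neighbour index, and a smarter chunker, but the data flow stays the same.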

This works well for simple factual questions over small document collections. But as the corpus grows, several problems emerge:

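• Loss of structure and intent: fixed-size chunks ignore headings, tables, and section hierarchy, so a retrieved passage can lose the context that gave it meaning.

• Poor cross-document reasoning: similarity search returns passages that resemble the question, not the chain of related facts, spread across several documents, that a multi-step question requires.

• Errors amplified by chunking: when a chunk boundary separates a statement from its conditions or qualifiers, the retriever can return a misleading fragment, and the LLM builds its answer on it.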
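The chunking problem is easy to reproduce. In this hypothetical example, naive fixed-size chunking splits a warranty rule from its condition, and a simple word-overlap score (a stand-in for vector similarity, not any particular product's retriever) returns only the unconditional half.

```python
import re

def chunk(text: str, size: int) -> list[str]:
    """Naive fixed-size chunking by word count, ignoring sentence meaning."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def tokens(text: str) -> set[str]:
    """Lowercase word set used for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def top_chunk(query: str, chunks: list[str]) -> str:
    """Pick the chunk sharing the most words with the query."""
    q = tokens(query)
    return max(chunks, key=lambda c: len(q & tokens(c)))

# Hypothetical policy sentence.
policy = "Model X200 warranty is void if operated above 40 C."
chunks = chunk(policy, 5)
print(chunks)  # ['Model X200 warranty is void', 'if operated above 40 C.']
print(top_chunk("When is the X200 warranty void?", chunks))  # Model X200 warranty is void
```

An LLM given only the retrieved chunk would reasonably conclude that the warranty is void unconditionally, which is exactly the kind of error chunking can amplify.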

GraphRAG: an improvement, but not enough

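GraphRAG introduces graph structures that connect entities, concepts, and document fragments. This improves context and cross-document reasoning, but most GraphRAG systems still produce raw, largely uncurated graphs that are hard to scale, and they lack a true ontological structure: a consistent schema of entity types and the relationships allowed between them.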
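To make the idea of connecting entities across documents concrete, here is a minimal sketch of a multi-hop lookup over a tiny graph of (subject, relation, object, source) triples. The triples, file names, relation names, and the `follow` helper are all hypothetical; this is a generic illustration of graph-based retrieval, not the implementation of any particular GraphRAG system or of KnowledgeWay.

```python
from collections import defaultdict

# Hypothetical (subject, relation, object, source document) triples,
# as an entity-extraction step might produce them.
TRIPLES = [
    ("Pump P-101", "uses_part", "Seal S-7", "maintenance_manual.pdf"),
    ("Seal S-7", "supplied_by", "Acme Seals", "supplier_contract.pdf"),
    ("Acme Seals", "located_in", "Ohio", "vendor_list.xlsx"),
]

def build_graph(triples):
    """Adjacency list: subject -> list of (relation, object, source)."""
    graph = defaultdict(list)
    for subj, rel, obj, src in triples:
        graph[subj].append((rel, obj, src))
    return graph

def follow(graph, start, relations):
    """Follow a chain of relations from `start`.

    Returns the final entity and the documents consulted along the way,
    or (None, sources-so-far) if a hop has no match. Takes the first match per hop.
    """
    node, sources = start, []
    for rel in relations:
        matches = [(obj, src) for r, obj, src in graph.get(node, []) if r == rel]
        if not matches:
            return None, sources
        node, src = matches[0]
        sources.append(src)
    return node, sources

graph = build_graph(TRIPLES)
# "Who supplies the part used in pump P-101?" needs facts from two documents.
print(follow(graph, "Pump P-101", ["uses_part", "supplied_by"]))
# ('Acme Seals', ['maintenance_manual.pdf', 'supplier_contract.pdf'])
```

Because each hop records its source document, the answer comes with provenance, something a single similarity search over isolated chunks does not naturally provide.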

A different approach: KnowledgeWay and EKG-RAG

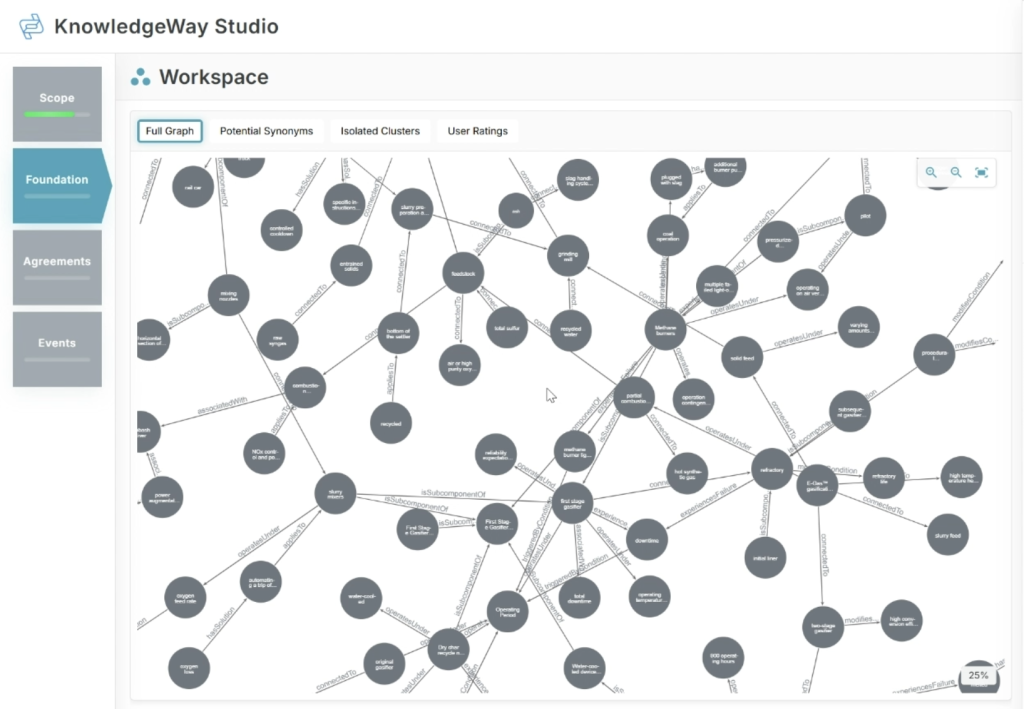

KnowledgeWay moves beyond these limitations by creating true Enterprise Knowledge Graphs (EKGs). Using agent-driven workflows, KnowledgeWay builds and evolves ontologies dynamically, extracting entities, relationships, and supporting text from documents.

The result is a human-readable, curatable knowledge graph that works seamlessly with LLMs.

Knowledge graphs that improve over time

Knowledge engineers can curate the EKG, resolve synonyms, fix inconsistencies, and incorporate SME feedback. User ratings from the Q&A interface further guide continuous improvement.
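Synonym resolution, one of the curation tasks mentioned above, can be illustrated with a minimal sketch that maps name variants to a canonical entity using a curated alias table. The alias table, entity names, and `resolve_synonyms` helper are hypothetical, and this is not a description of how KnowledgeWay performs curation.

```python
# Hypothetical curated alias table: variant name -> canonical entity name.
ALIASES = {
    "P101": "Pump P-101",
    "pump 101": "Pump P-101",
    "Acme Seals Inc.": "Acme Seals",
}

def canonical(name: str) -> str:
    """Return the canonical name for an entity (exact, case-sensitive match)."""
    return ALIASES.get(name, name)

def resolve_synonyms(triples):
    """Rewrite subjects and objects to canonical names and drop resulting duplicates."""
    seen, resolved = set(), []
    for subj, rel, obj in triples:
        t = (canonical(subj), rel, canonical(obj))
        if t not in seen:
            seen.add(t)
            resolved.append(t)
    return resolved

raw = [
    ("P101", "uses_part", "Seal S-7"),
    ("pump 101", "uses_part", "Seal S-7"),
    ("Seal S-7", "supplied_by", "Acme Seals Inc."),
]
print(resolve_synonyms(raw))
# [('Pump P-101', 'uses_part', 'Seal S-7'), ('Seal S-7', 'supplied_by', 'Acme Seals')]
```

Keeping the alias table as explicit, editable data is what lets a knowledge engineer review and correct merges rather than trusting an opaque automatic step.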

KnowledgeWay Q&A

KnowledgeWay provides a familiar chatbot interface backed by the enterprise knowledge graph. Answers are more consistent, context-aware, and faithful to organizational reality. The platform works with any public or private LLM, including models deployed behind your firewall.

Software 3.0 in practice

KnowledgeWay exemplifies Software 3.0—applications driven by natural language, agents, and AI working in the background to guide users toward better outcomes.

From knowledge to decisions

KnowledgeWay integrates with Geminos CauseWay to connect organizational knowledge with causal, data-driven decision-making—bridging intuitive understanding and rigorous analysis.

Turning knowledge into a strategic asset

KnowledgeWay combines the strengths of RAG, knowledge graphs, and agent-driven AI to deliver faster time to value and measurable ROI. If you want AI that understands your organization—not just the internet—KnowledgeWay is built for you.